PR Industry view

January 2026

Tom Lawrence

Founder & CEO - MVPR

Most companies are getting human-in-the-loop wrong.

We've been responding to AI compliance questionnaires from enterprise clients for the past six months - detailed 25-page documents asking us to explain exactly where humans intercept AI decisions, how we prevent hallucinations, and how we're preparing for the EU AI Act.

Here's what surprised me: most companies in our space aren't getting these questions yet. But the ones that are? They're scrambling.

Whether or not you agree with the EU AI Act, the reality is this: enterprise clients are already demanding AI transparency and governance frameworks. And most teams aren't ready.

The trust problem isn't about using AI. It's about proving you're using it responsibly.

After building our compliance framework, I'm seeing three critical mistakes companies make with human-in-the-loop systems:

Mistake #1: Treating human oversight as a checkbox

Most teams add a "human reviews it" step and call it done. But effective human-in-the-loop requires defining which decisions need human judgment and why. You need to map every workflow to identify where AI assistance ends and human authority begins. Without this mapping, you have no defensible framework when clients or regulators ask for documentation.

Mistake #2: No clear decision boundaries

Ask most teams "which decisions does AI make autonomously?" and you'll get vague answers. This ambiguity creates risk, both for quality and for compliance. We built a simple framework: Strategic planning = 100% human. Research synthesis = AI-assisted, human-validated. Final client approval = 100% human. Every stage has explicit checkpoints where a human must review before proceeding.
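One way to keep those boundaries from drifting back into vagueness is to write them down as data rather than as tribal knowledge. The sketch below is illustrative only: the names `Authority`, `DECISION_BOUNDARIES`, and `ai_may_contribute` are hypothetical, and the three entries are simply the examples from the framework described here, not a complete map.

```python
from enum import Enum


class Authority(Enum):
    """Who holds authority over a decision type (labels are assumptions)."""
    HUMAN_ONLY = "100% human"
    AI_ASSISTED = "AI-assisted, human-validated"


# The three example decision types from the framework; keys are illustrative.
DECISION_BOUNDARIES = {
    "strategic_planning": Authority.HUMAN_ONLY,
    "research_synthesis": Authority.AI_ASSISTED,
    "final_client_approval": Authority.HUMAN_ONLY,
}


def ai_may_contribute(decision: str) -> bool:
    """Return True only if the decision is explicitly mapped as AI-assisted.

    Unmapped decisions raise an error, so ambiguity surfaces as a gap
    in the map instead of being quietly guessed at.
    """
    if decision not in DECISION_BOUNDARIES:
        raise KeyError(f"No decision boundary defined for {decision!r}")
    return DECISION_BOUNDARIES[decision] is Authority.AI_ASSISTED
```

The design choice worth noting is the failure on unmapped decisions: if a team can't say who owns a decision, the safest default is to stop and define it, not to let AI proceed.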

Mistake #3: Assuming compliance = slowing down

The opposite is true. Clear human-in-the-loop systems make teams faster because everyone knows their role. AI handles research and first drafts. Humans handle strategy, fact-checking, and client relationships. No confusion, no bottlenecks, no wondering "should AI do this or should I?"

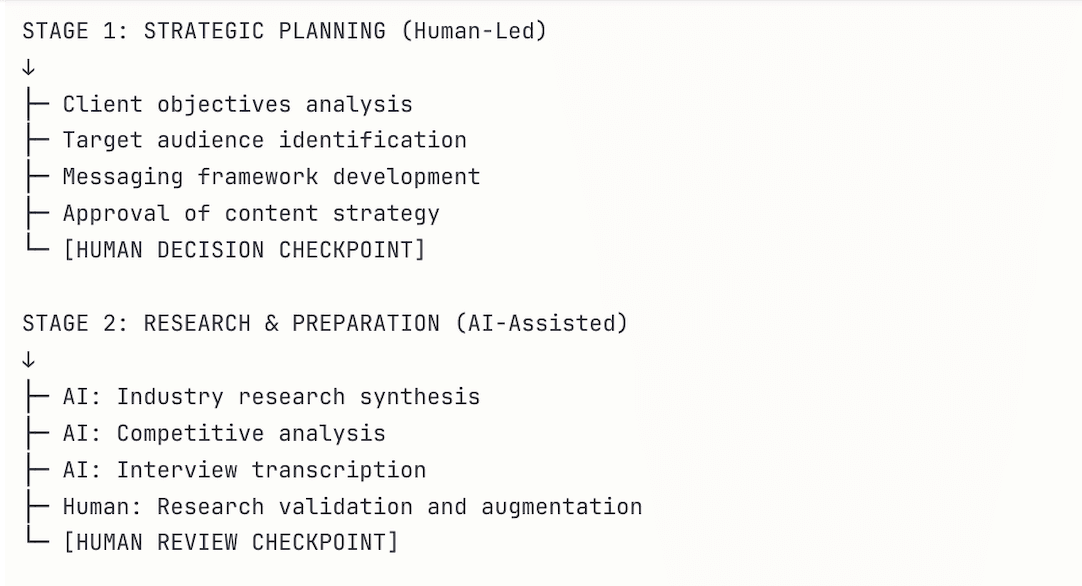

Here's how to structure human-in-the-loop systems properly:

The key is creating mandatory checkpoints where humans must review, validate, or approve before AI work can advance. Think of it as a multi-stage workflow where each stage has defined AI and human responsibilities.

Our content creation workflow has six stages, with five mandatory human checkpoints followed by a final client approval:

Stage 1: Strategic Planning (Human-Led) -- Client objectives analysis, target audience identification, messaging framework development. AI isn't involved here. [HUMAN DECISION CHECKPOINT]

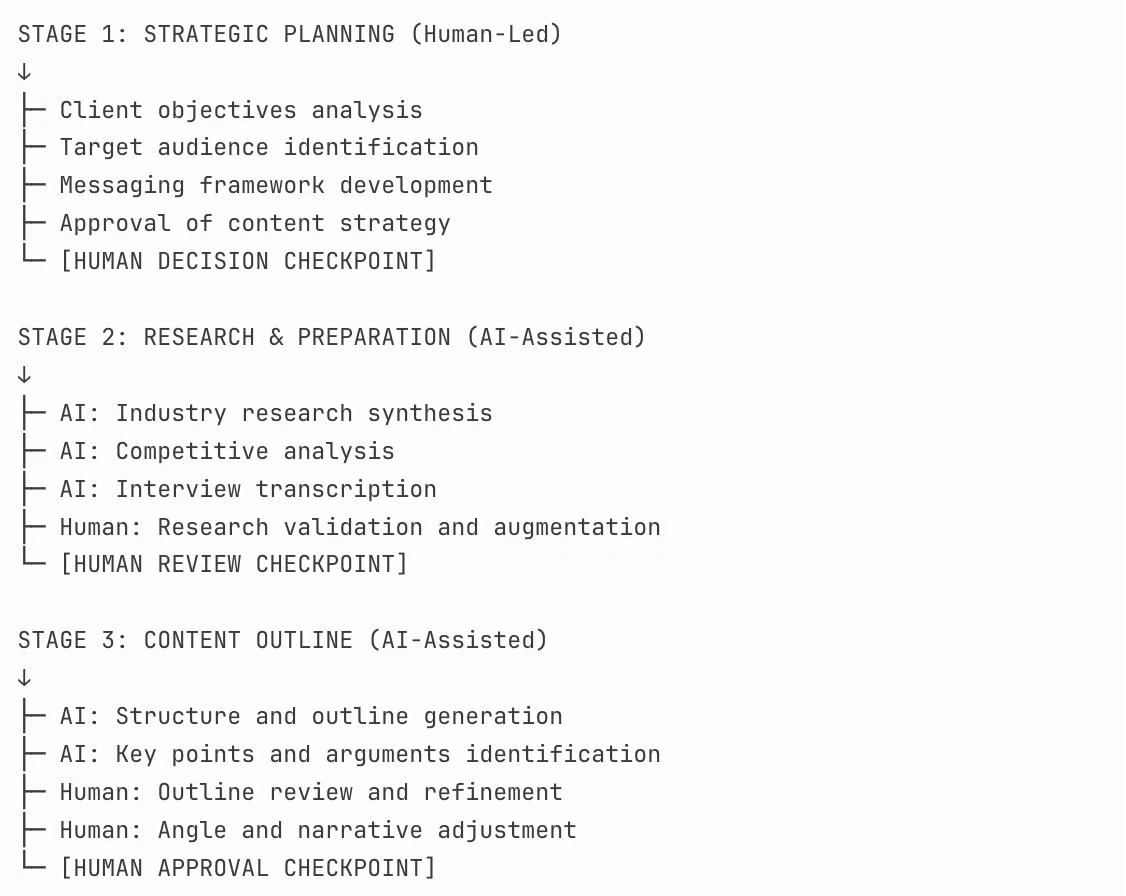

Stage 2: Research & Preparation (AI-Assisted) -- AI synthesizes industry research, competitive analysis, and interview transcriptions. Human validates all research and augments with additional context. [HUMAN REVIEW CHECKPOINT]

Stage 3: Content Outline (AI-Assisted) -- AI generates structure and identifies key points. Human reviews outline, refines angle, and adjusts narrative before any drafting begins. [HUMAN APPROVAL CHECKPOINT]

Stage 4: Draft Creation (AI-Assisted) -- AI creates initial draft based on the approved outline. Human provides comprehensive editorial review, tone refinement, voice adjustment, and fact-checking against authoritative sources. [HUMAN QUALITY CHECKPOINT]

Stage 5: Quality Assessment (AI-Assisted) -- AI checks grammar, readability, and brand voice consistency. Human evaluates AI recommendations, decides which to implement, and applies final editorial polish. [HUMAN FINAL REVIEW]

Stage 6: Client Review (Human-Led) -- Human presents work to client with full explanation, incorporates feedback, manages revision process, and controls final approval. [CLIENT APPROVAL REQUIRED]

No AI output advances past any checkpoint without explicit human validation. No content reaches a client without human review. No content publishes without client approval.
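For teams that track content in software, the rule that nothing advances without a sign-off can be enforced rather than just documented. The following is a minimal sketch under stated assumptions, not a description of our actual tooling: `Stage`, `ContentItem`, `CheckpointNotCleared`, and the `ai_assisted` flag are hypothetical names, while the stage names and checkpoint labels are taken from the six stages listed above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Stage:
    name: str
    ai_assisted: bool  # False means the stage is human-led
    checkpoint: str    # label of the gate that must be cleared to advance


# The six stages and their checkpoints, as listed in the workflow above.
STAGES = [
    Stage("Strategic Planning", False, "HUMAN DECISION CHECKPOINT"),
    Stage("Research & Preparation", True, "HUMAN REVIEW CHECKPOINT"),
    Stage("Content Outline", True, "HUMAN APPROVAL CHECKPOINT"),
    Stage("Draft Creation", True, "HUMAN QUALITY CHECKPOINT"),
    Stage("Quality Assessment", True, "HUMAN FINAL REVIEW"),
    Stage("Client Review", False, "CLIENT APPROVAL REQUIRED"),
]


class CheckpointNotCleared(Exception):
    """Raised when work tries to advance without a recorded sign-off."""


@dataclass
class ContentItem:
    title: str
    stage_index: int = 0
    # Audit trail of (checkpoint label, approver name) pairs.
    sign_offs: list = field(default_factory=list)

    @property
    def stage(self) -> Stage:
        return STAGES[self.stage_index]

    def sign_off(self, approver: str) -> None:
        """Record that a named person cleared the current checkpoint."""
        self.sign_offs.append((self.stage.checkpoint, approver))

    def advance(self) -> None:
        """Move to the next stage only if the current checkpoint is cleared."""
        cleared = {checkpoint for checkpoint, _ in self.sign_offs}
        if self.stage.checkpoint not in cleared:
            raise CheckpointNotCleared(
                f"{self.stage.name}: {self.stage.checkpoint} has no sign-off"
            )
        if self.stage_index < len(STAGES) - 1:
            self.stage_index += 1
```

In use, calling `advance()` on a fresh `ContentItem` raises `CheckpointNotCleared`; calling `sign_off("account lead")` first lets it move from Strategic Planning to Research & Preparation. The `sign_offs` list doubles as the audit trail: it records which named person cleared which checkpoint, which is the kind of evidence a compliance questionnaire tends to ask for.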

This approach does three things simultaneously:

First, it builds trust with clients who need to demonstrate AI governance to their boards, legal teams, or regulators.

Second, it maintains quality because humans intercept AI work at the moments when human judgment matters most -- strategy, accuracy, tone, and client relationships.

Third, it creates clear documentation for compliance frameworks. When enterprise clients ask "where are your human oversight checkpoints?" -- we can point to specific stages with defined responsibilities.

For communications leaders managing teams, this isn't just about compliance.

It's about building systems that let your team move faster while maintaining the quality standards your clients expect. It's about being ready when your largest client asks: "How do you ensure AI accuracy and human oversight?"

The EU AI Act entered into force in August 2024, and its obligations are phasing in through 2025, 2026, and beyond. But the real deadline is whenever your client sends you that 25-page AI compliance questionnaire.

If you're not ready to answer those questions in detail, with documented workflows and clear decision boundaries, you're already behind.

Why Us

We believe in a world where our PR services are transparent, and data supports our strategic decision-making. Where clients own relationships directly with journalists. And where PR teams use AI in the right way.